Longitudinal Assessment of Radiosurgery Response in Small Brain Metastases: A Comprehensive Framework for Automated Tumor Segmentation and Monitoring on Serial MRI

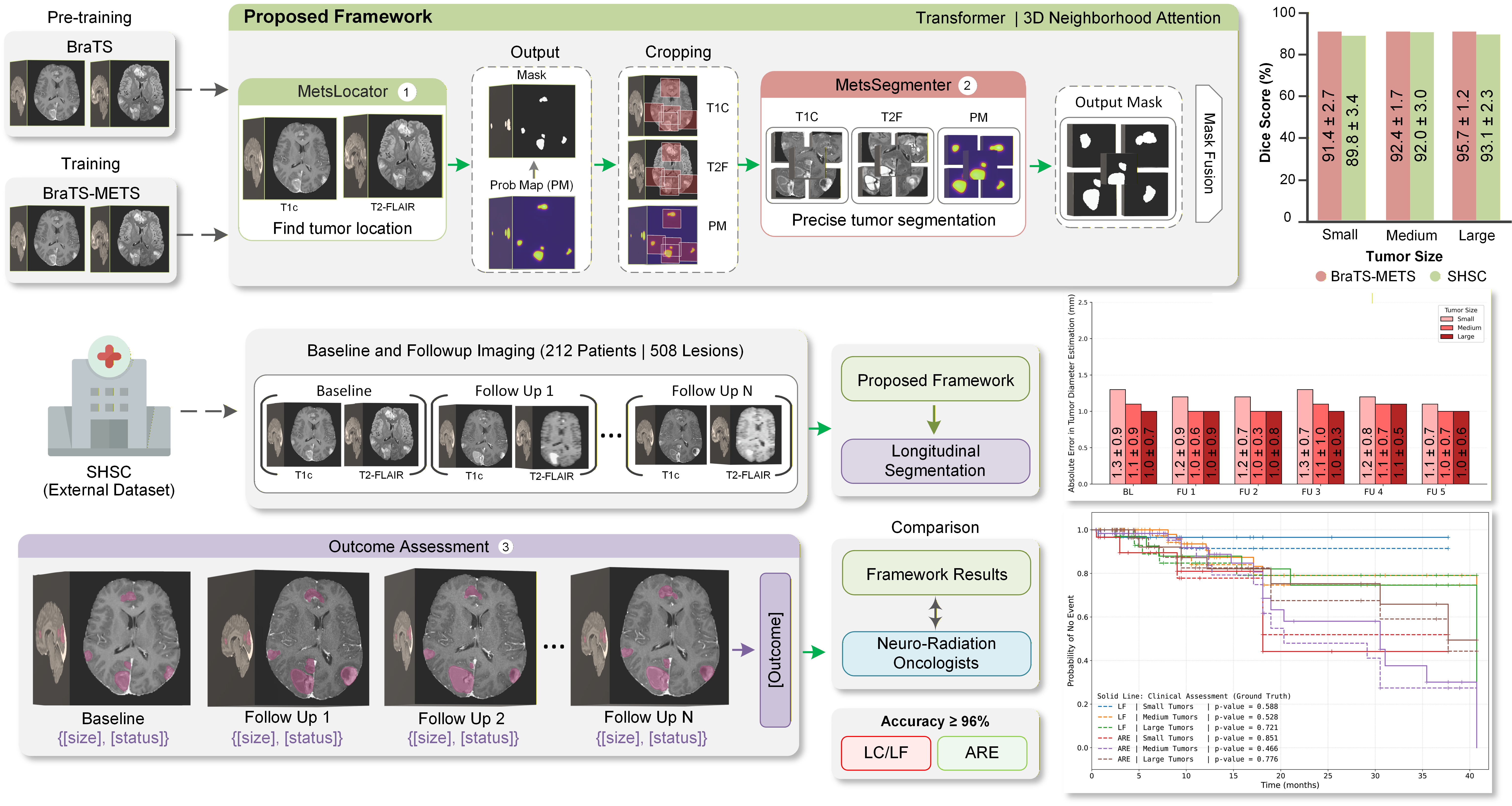

An automated deep learning framework for longitudinal segmentation and radiotherapy outcome assessment of brain metastases using standard serial MRI. The method integrates a transformer-based architecture with a 3D neighborhood attention mechanism to achieve precise tumor delineation across a wide range of lesion sizes, with particular emphasis on small metastases that are often missed by existing approaches.

- Clinical motivation: Manual tumor delineation on serial MRI is labor-intensive, prone to inter-observer variability, and especially challenging for small lesions that critically impact radiotherapy outcome assessment.

- Framework: A multi-step U-shaped encoder–decoder architecture incorporating transformer blocks with 3D neighborhood attention and probability-map guidance.

- Cascaded design: The framework consists of two networks, MetsLocator for tumor localization and MetsSegmenter for precise lesion-level segmentation, followed by an automated outcome assessment module.

- Input data: 3D contrast-enhanced T1-weighted (T1c) and T2-FLAIR MRI volumes (512 × 512 × 200 voxels) acquired at baseline and at multiple follow-up sessions.

- Datasets: Trained on BraTS and BraTS-METS and evaluated on an independent external cohort of 212 patients with 508 brain metastases treated with stereotactic radiosurgery.

- Segmentation performance: Achieved Dice scores of 89.8 ± 3.4% for tumors < 1 cm, 92.0 ± 3.0% for tumors between 1 and 2 cm, and 93.1 ± 2.3% for tumors > 2 cm.

- Longitudinal assessment: Accurately monitors tumor size dynamics and automatically assesses local control, local failure, and adverse radiation effects with accuracies exceeding 96% across tumor size categories.

- Impact: Demonstrates strong generalizability and substantially outperforms state-of-the-art segmentation models, particularly for small lesions, enabling scalable and consistent radiotherapy outcome assessment in neuro-oncology.
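
The Dice scores reported above measure voxel overlap between a predicted mask and a reference mask. As a minimal sketch only (not the authors' evaluation code), the snippet below computes Dice for two binary 3D masks with NumPy and assigns a lesion to one of the three size categories. The function names, the treatment of two empty masks, and the handling of lesions measuring exactly 1 cm or 2 cm are assumptions made for illustration; how lesion diameter is measured is not specified here.

```python
import numpy as np


def dice_score(pred, truth):
    """Dice = 2|P ∩ T| / (|P| + |T|) for two binary masks of equal shape."""
    pred = np.asarray(pred, dtype=bool)
    truth = np.asarray(truth, dtype=bool)
    if pred.shape != truth.shape:
        raise ValueError("masks must have the same shape")
    denom = pred.sum() + truth.sum()
    if denom == 0:
        # Assumption: two empty masks are treated as perfect agreement.
        return 1.0
    return float(2.0 * np.logical_and(pred, truth).sum() / denom)


def size_category(diameter_cm):
    """Map a lesion diameter (cm) to the size bins used in the summary.

    Assumption: lesions measuring exactly 1 cm or 2 cm go in the middle bin.
    """
    if diameter_cm < 1.0:
        return "<1 cm"
    if diameter_cm <= 2.0:
        return "1-2 cm"
    return ">2 cm"


# Toy example: a 4x4x4 cube and the same cube shifted by one voxel.
truth = np.zeros((8, 8, 8), dtype=bool)
truth[2:6, 2:6, 2:6] = True          # 64 voxels
pred = np.zeros_like(truth)
pred[3:7, 2:6, 2:6] = True           # 64 voxels, 48 of them overlapping

print(dice_score(pred, truth))       # 2 * 48 / (64 + 64) = 0.75
print(size_category(0.7))            # "<1 cm"
```

A one-voxel shift already costs a quarter of the score on this small cube, which illustrates why overlap metrics are harder to keep high for small lesions than for large ones.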

Attention-Guided Deep Learning of Chemical Exchange Saturation Transfer Magnetic Resonance Imaging to Differentiate Between Tumor Progression and Radiation Necrosis in Brain Metastasis

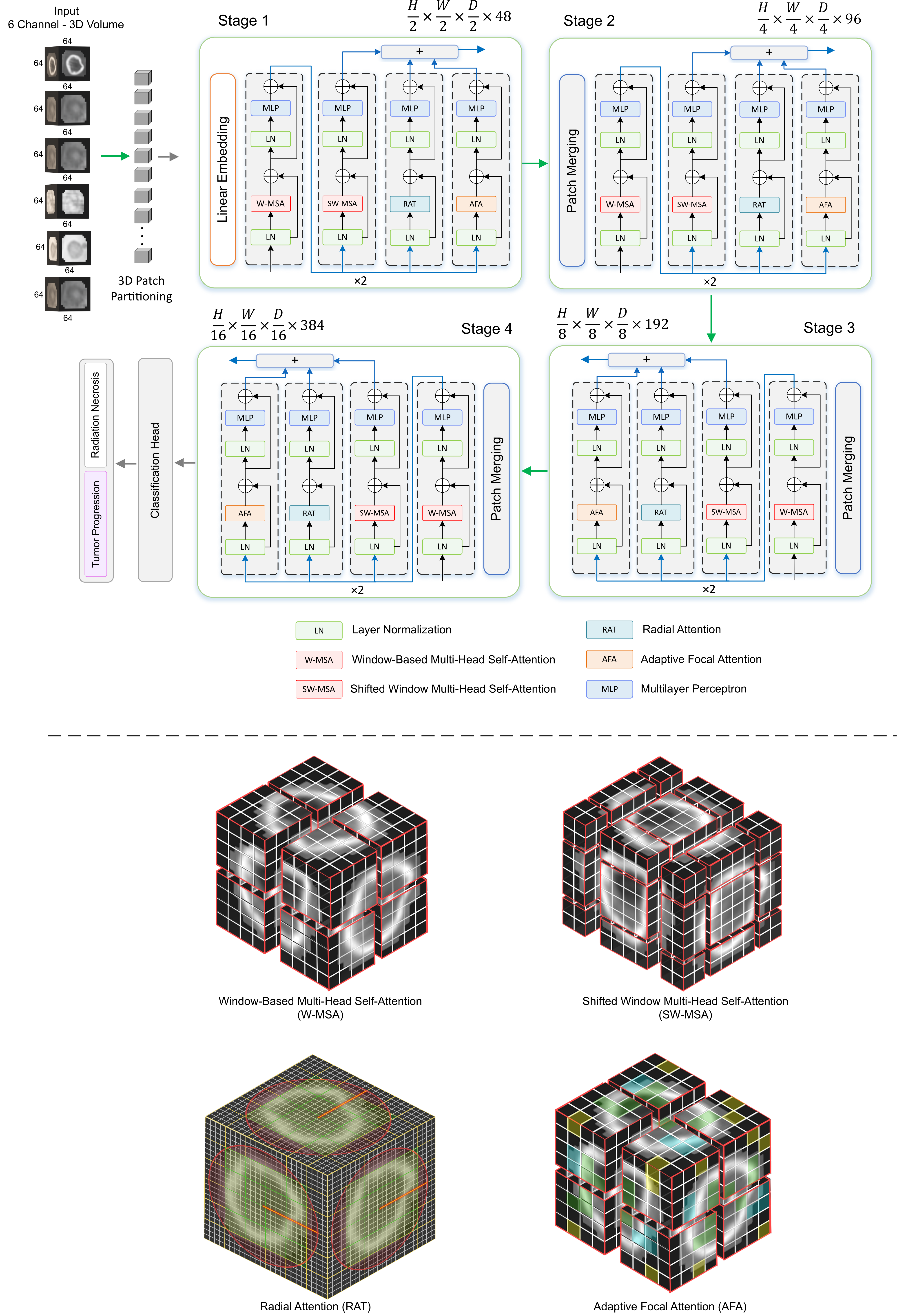

A deep learning framework that analyzes multimodal chemical exchange saturation transfer (CEST) MRI, T1/T2 mapping, and structural MRI to differentiate tumor progression (TP) from radiation necrosis (RN) in brain metastases treated with stereotactic radiosurgery (SRS). The model leverages a 3D transformer architecture with two novel attention mechanisms to enhance multimodal feature fusion and improve diagnostic accuracy.

- Clinical need: SRS-treated brain metastases often develop radiation necrosis (RN), which is extremely difficult to distinguish from true tumor progression (TP) on routine MRI.

- Dataset: Multimodal MRI from 93 patients (230 lesions), including AmideMTR, rNOEMTR, T1/T2 maps, T1c, and T2-FLAIR; outcomes confirmed by histopathology or ≥6-month clinical follow-up.

- Model: Developed a 3D transformer-based classifier incorporating two new attention mechanisms designed specifically for multimodal MRI fusion.

- Training/testing split: 184 lesions for training and 46 held-out lesions for independent evaluation.

- Key finding: Dual-channel models achieved AUC 0.76–0.78, while fusing CEST maps with structural MRI significantly improved performance to AUC 0.84–0.85.

- Best performance: Using all six MRI modalities yielded AUC = 0.87 ± 0.01 for differentiating TP vs RN.

- Impact: First deep learning framework to automatically analyze CEST MRI for this task; shows strong potential for improving diagnostic confidence and treatment decision-making in post-SRS patients.
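
The AUC values above can be read as the probability that a randomly chosen TP lesion receives a higher model score than a randomly chosen RN lesion. The sketch below computes AUC directly from that pairwise definition in plain Python. It is an illustration under stated assumptions, not the authors' evaluation code: the label convention (1 = TP, 0 = RN), the function name, and the toy scores are hypothetical. For reference, the 184/46 split corresponds to 80% and 20% of the 230 lesions.

```python
def roc_auc(labels, scores):
    """AUC as the fraction of (positive, negative) pairs ranked correctly.

    Ties between a positive and a negative score count as half a win.
    Assumed label convention: 1 = tumor progression, 0 = radiation necrosis.
    """
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    if not pos or not neg:
        raise ValueError("need at least one positive and one negative label")
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(pos) * len(neg))


# Toy scores (hypothetical, not study data): 8 of 9 pairs are ordered correctly.
labels = [1, 1, 1, 0, 0, 0]
scores = [0.9, 0.8, 0.4, 0.7, 0.3, 0.2]
print(round(roc_auc(labels, scores), 3))  # 0.889

# Split proportions implied by the lesion counts in the summary.
print(184 / 230, 46 / 230)                # 0.8 0.2
```

The pairwise form is quadratic in the number of lesions, which is acceptable at this scale; a rank-based formulation gives the same value more efficiently on larger cohorts.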

Track, Measure, Evaluate: A Clinically Aligned Pipeline for Automatic Radiotherapy Response Assessment in Brain Tumors

SoFTNet (Slow/Fast Thinking Network): A Concept-controlled Deep Learning Architecture for Interpretable Image Classification

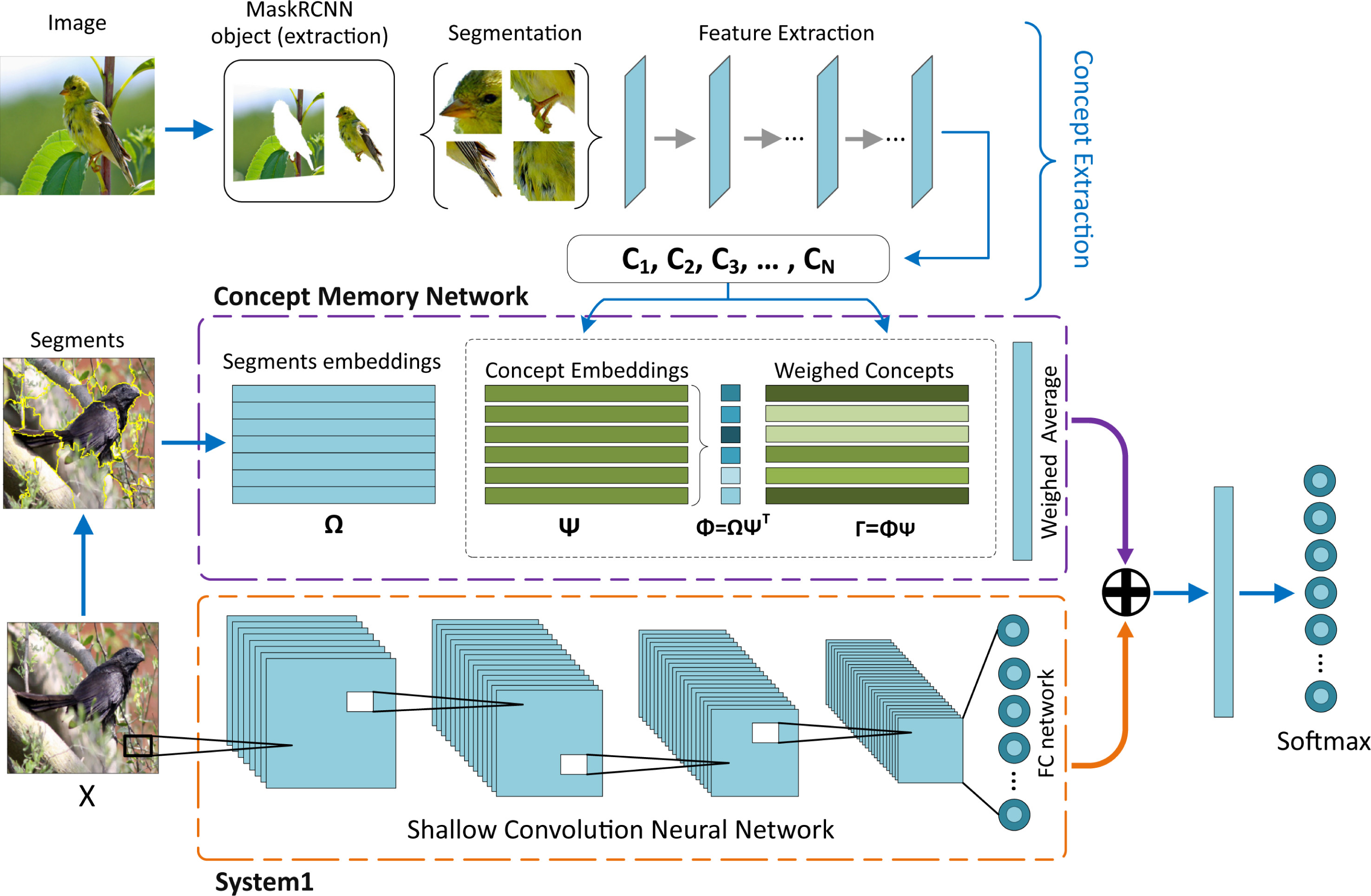

A controllable deep learning architecture that separates fast, low-level feature extraction from slow, high-level concept-based reasoning. SoFTNet enables users to define and control human-meaningful concepts and uses them directly for image classification, providing interpretable predictions inspired by the dual-process theory of human thinking.

- Problem: Standard deep classifiers are accurate but difficult to interpret, and most existing explanation methods operate on low-level features rather than explicit, high-level concepts.

- Concept extraction: Introduces a data-driven method to extract meaningful concepts, forming a concept space that can be inspected and controlled by users.

- Architecture: Proposes SoFTNet, a dual-stream model combining a shallow CNN for fast, low-level processing with a Concept Memory Network for slow, transparent, concept-based reasoning.

- Baseline method: Defines Concept-based Deep k-Nearest Neighbors (CDkNN), a hardened form of the Concept Memory Network and a variant of DkNN, to benchmark concept-based interpretability methods.

- Datasets: Evaluated on CUB-200-2011 (fine-grained bird species classification) and STL-10 (object recognition) to test both fine-grained and generic image classification tasks.

- Results: Achieves performance comparable to state-of-the-art non-interpretable models while outperforming other interpretable approaches, demonstrating that strong accuracy and convincing concept-level explanations can be achieved simultaneously.
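
To make the idea of classifying in a concept space concrete, the sketch below runs a plain k-nearest-neighbors vote over concept activation vectors and returns the neighbors as the explanation. This is a generic illustration, not the authors' CDkNN or Concept Memory Network: the concept names, the Euclidean distance, the majority vote, and all values are assumptions chosen for the example.

```python
import math
from collections import Counter


def knn_predict(query, memory, k=3):
    """Classify `query` by majority vote among its k nearest stored examples.

    memory: list of (concept_vector, label) pairs.
    Returns the predicted label and the neighbors that produced it, so the
    decision can be inspected example by example.
    """
    if not 1 <= k <= len(memory):
        raise ValueError("k must be between 1 and the number of stored examples")
    ranked = sorted(memory, key=lambda item: math.dist(query, item[0]))
    neighbors = ranked[:k]
    votes = Counter(label for _, label in neighbors)
    return votes.most_common(1)[0][0], neighbors


# Hypothetical concept axes: (red crown, long beak). Values are made up.
memory = [
    ((0.9, 0.1), "woodpecker"),
    ((0.8, 0.2), "woodpecker"),
    ((0.1, 0.9), "heron"),
    ((0.2, 0.8), "heron"),
]

label, neighbors = knn_predict((0.85, 0.15), memory, k=3)
print(label)                           # woodpecker
print([lab for _, lab in neighbors])   # ['woodpecker', 'woodpecker', 'heron']
```

Because each axis corresponds to a named concept, the returned neighbors show which stored examples and which concept values drove the prediction, which is the kind of transparency the summary attributes to concept-based reasoning.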
